Fine-Tuning Datasets Used in AI-Generated Sexual Images Resembling Real Children; Datasets Being Sold Online

6:00 JST, June 3, 2024

AI training data that can be used to produce images closely resembling real children is being sold online, The Yomiuri Shimbun has learned.

The fine-tuning datasets include images of Japanese former child celebrities. Sexual images closely resembling the children were being sold on a separate website, and it is believed that the data was used to create them.

Regulating the trade of this type of data is said to be difficult under Japan’s law against child prostitution and child pornography, and experts are calling for the establishment of legislation to address the problem.

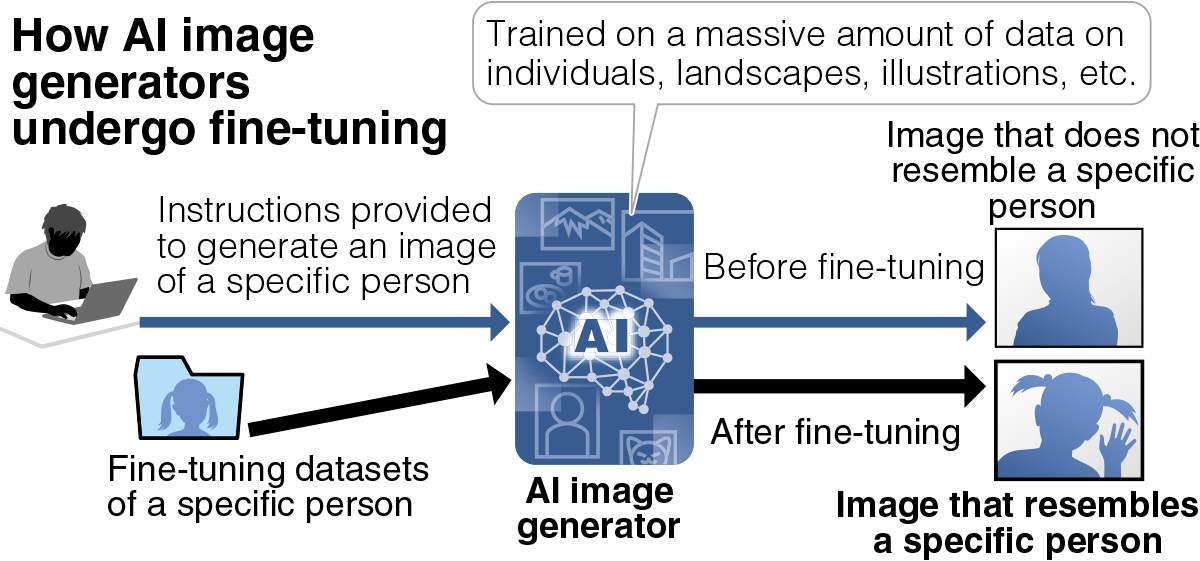

Learning from a vast amount of image data, AI image generators produce elaborate, photorealistic images from simple written instructions. Generating an image that closely resembles a specific person is difficult with just the input of their name. But some AI image generators can create images that closely resemble particular people if trained on fine-tuning datasets containing dozens of images of that person. This fine-tuning data is known as “LoRA” (short for low-rank adaptation), among other names.

The Yomiuri Shimbun identified an English-language website selling fine-tuning datasets for several former child celebrities who have worked in Japan and abroad. The site listed the names of the actual personalities in the descriptions of the data, and each dataset was being sold for the equivalent of $3 in crypto assets. The site also offered data on adult women.

A separate Japanese site was selling sexual images closely resembling Japanese child celebrities for whom fine-tuning data was on sale, and the images were marked as having been produced by AI. According to some experts, the characteristics of the images indicate that they were likely produced using fine-tuning data.

AI image generators can quickly produce a massive number of images, and the subject’s pose and facial expression can be freely set. If fine-tuning datasets on real people are circulated, sexually explicit images that closely resemble not only real children but also real adults may spread widely.

According to the Justice Ministry, the law against child prostitution and child pornography is applicable only when a child victim exists. Court precedents show that even computer-generated images can be subject to regulations if they appear to depict a specific real child. However, some experts say that the law may not apply unless the physical and facial features of the generated images closely resemble a particular existing child. Therefore, whether AI-generated sexual images of children can be regulated remains to be seen.

“The finding uncovered the fact that generative AI and fine-tuning datasets are being misused to violate the rights of children,” said Prof. Takashi Nagase of Kanazawa University, a former judge well versed in issues related to online speech and expression. “The fear is that the damage may be spreading under the surface. This is a situation unanticipated by the current law, so it’s necessary to consider establishing relevant legislation to regulate AI-generated sexual images of children.”
