Examining Generative AI: Cutting Through the Chaos / Competition Accelerates AI Development, Overrides Caution; Warning Voices Left Behind In Latest Tech Gold Rush

The Yomiuri Shimbun

6:00 JST, November 25, 2024

This is the fourth installment in a series examining how society should deal with generative artificial intelligence (AI).

***

“AI systems with human-competitive intelligence can pose profound risks to society and humanity … Therefore, we call on all AI labs to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4.”

These lines are excerpted from an open letter issued in March last year by the U.S. nonprofit organization Future of Life Institute (FLI), as ChatGPT was sweeping the world.

The letter warns that humans risk losing control of AI if its development continues unchecked, and it calls for a pause in the development of powerful AI systems. It was signed by prominent figures including entrepreneur Elon Musk and historian Yuval Noah Harari, among many others.

The signatory list also includes the names of Japanese researchers. Keio University Prof. Satoshi Kurihara, the president of the Japanese Society for Artificial Intelligence, said: “The hasty release of AI into the world will cause confusion. AI developers and users should stop for a moment and think about the various issues involved.”

University of Tokyo Prof. Emeritus Yoshihiko Nakamura, a robot researcher, said: “Conversations with AI may have a major impact on human intellectual activity. I think we all need to think about this.” Leaders in various fields were increasingly concerned about the rapid spread of generative AI.

‘Public will pay price’

However, the movement quickly petered out. OpenAI, Microsoft Corp. and Google LLC released new AI models and related services in rapid succession. The development race intensified amid expectations of a huge future market, and the stock market was abuzz with the generative AI boom.

“In the midst of the huge movement of capital, the open letter was powerless,” Nakamura said.

Just four months after the letter’s release, Musk announced the formation of a new company, xAI, effectively consigning his signature to the dustbin.

“Businesses can’t stop development as they’re up against rivals. Humans are creatures that can’t stop themselves after all,” Kurihara said, expressing his complex feelings about the development race.

AI developers themselves have voiced alarm that their haste to release new services has led to the neglect of safety measures.

At OpenAI, which became one of the world’s largest startups on the back of ChatGPT’s explosive spread, key executives have left one after another, apparently in protest at the company’s shift toward a focus on profit.

“Over the past years, safety culture and processes have taken a backseat to shiny products,” Jan Leike, who was in charge of OpenAI’s safety measures, wrote in a post criticizing its management team on X (formerly Twitter) when he left the company in May. “OpenAI must become a safety-first AGI [artificial general intelligence] company.”

University of Toronto Prof. Emeritus and AI scientist Geoffrey Hinton criticized OpenAI at a press conference on Oct. 8, the day he was named a winner of the 2024 Nobel Prize in Physics. “Over time, it turned out that [OpenAI Chief Executive Officer] Sam Altman was much less concerned with safety than with profits. And I think that’s unfortunate,” he said.

On Oct. 2, OpenAI announced that it had raised $6.6 billion (about ¥1 trillion) in new funding, a sign that generative AI development will accelerate further. It has also been reported that the company will be reorganized from its current nonprofit-oriented structure into a more profit-oriented one. In the face of business logic, the company’s founding mission to ensure “AGI benefits all of humanity” appears to be wavering.

The FLI’s open letter has been signed by more than 33,000 people. FLI Communications Director Ben Cumming is deeply concerned about the development race among AI corporations, including big tech firms.

“Intense competitive pressures will force them [AI corporations] to cut corners safety-wise, and it is the public that will pay the price,” Cumming said.

Related Articles “Examining Generative AI: Cutting Through the Chaos”

Most Read

Popular articles in the past 24 hours

-

Milano Cortina 2026: Ceremony for Winter Paralympic Torch Relay T...

-

WBC to Begin: All the Pieces are in Place for Japan’s Second Stra...

-

How Trump Assassination Attempts Played into His Decision to Atta...

-

Tokyo High Court Upholds Unification Church Dissolution Order

-

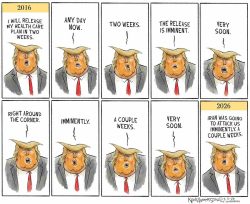

CARTOON OF THE DAY (March 4)

-

PM Sanae Takaichi: Iran Situation Won’t Immediately Affect Electr...

-

Trump's Asian Allies Fear Iran War Will Sap Defenses against Chin...

-

Japanese Speedskater Takagi Announces Retirement

Popular articles in the past week

-

Tokyo Spends Big on Children, Wins Over Parents

-

Japan’s Miura, Kihara Announce Withdrawal from Figure Skating Wor...

-

South Korea Tightens Rules on Foreigners Buying Homes in Seoul Me...

-

BOJ Keeping Eye on Economy and Takaichi's ‘Proactive Fiscal Polic...

-

Tokyo Measles Patient Traveled to Fukuoka Aboard JAL Planes; Susp...

-

Nidec Chairman Resigns Amid Accounting Scandal at Major Japanese ...

-

Strait of Hormuz Closure Shakes Markets; Prolonged Closure Could ...

-

Tourists Ignore Safety Barriers Near Famous Zao ‘Snow Monsters’ i...

Popular articles in the past month

-

Producer Behind Pop Group XG Arrested for Cocaine Possession

-

Japan PM Takaichi’s Cabinet Resigns en Masse

-

Man Infected with Measles Reportedly Dined at Restaurant in Tokyo...

-

Israeli Ambassador to Japan Speaks about Japan’s Role in the Reco...

-

Videos Plagiarized, Reposted with False Subtitles Claiming ‘Ryuky...

-

Prudential Life Insurance Plans to Fully Compensate for Damages C...

-

Woman with Measles Visited Hospital in Tokyo Multiple Times Befor...

-

iPS Treatments Pass Key Milestone, but Broader Applications Far f...

Top Articles in Society

-

Producer Behind Pop Group XG Arrested for Cocaine Possession

-

Man Infected with Measles Reportedly Dined at Restaurant in Tokyo Station

-

Woman with Measles Visited Hospital in Tokyo Multiple Times Before Being Diagnosed with Disease

-

Bus Carrying 40 Passengers Catches Fire on Chuo Expressway; All Evacuate Safely

-

Tokyo Skytree’s Elevator Stops, Trapping 20 People; All Rescued (Update 1)

JN ACCESS RANKING

-

Producer Behind Pop Group XG Arrested for Cocaine Possession

-

Japan PM Takaichi’s Cabinet Resigns en Masse

-

Man Infected with Measles Reportedly Dined at Restaurant in Tokyo Station

-

Israeli Ambassador to Japan Speaks about Japan’s Role in the Reconstruction of Gaza

-

Videos Plagiarized, Reposted with False Subtitles Claiming ‘Ryukyu Belongs to China’; Anti-China False Information Also Posted in Japan