Artificial Intelligence Pollutes Web with Fake Info; Bogus Images, Data Often More Convincing than Reality

False information that appears to be real is proliferating in digital space.

8:00 JST, February 15, 2025

When we think of “pollution,” it’s easy to imagine the natural environment. Microplastics are spreading in the oceans, for example, and there is concern about their negative impact on living creatures.

However, in this digital era, pollution is not limited to the natural environment. The development of artificial intelligence is spreading contaminants to digital spaces connected by the internet. These contaminants are not toxic substances, but disinformation.

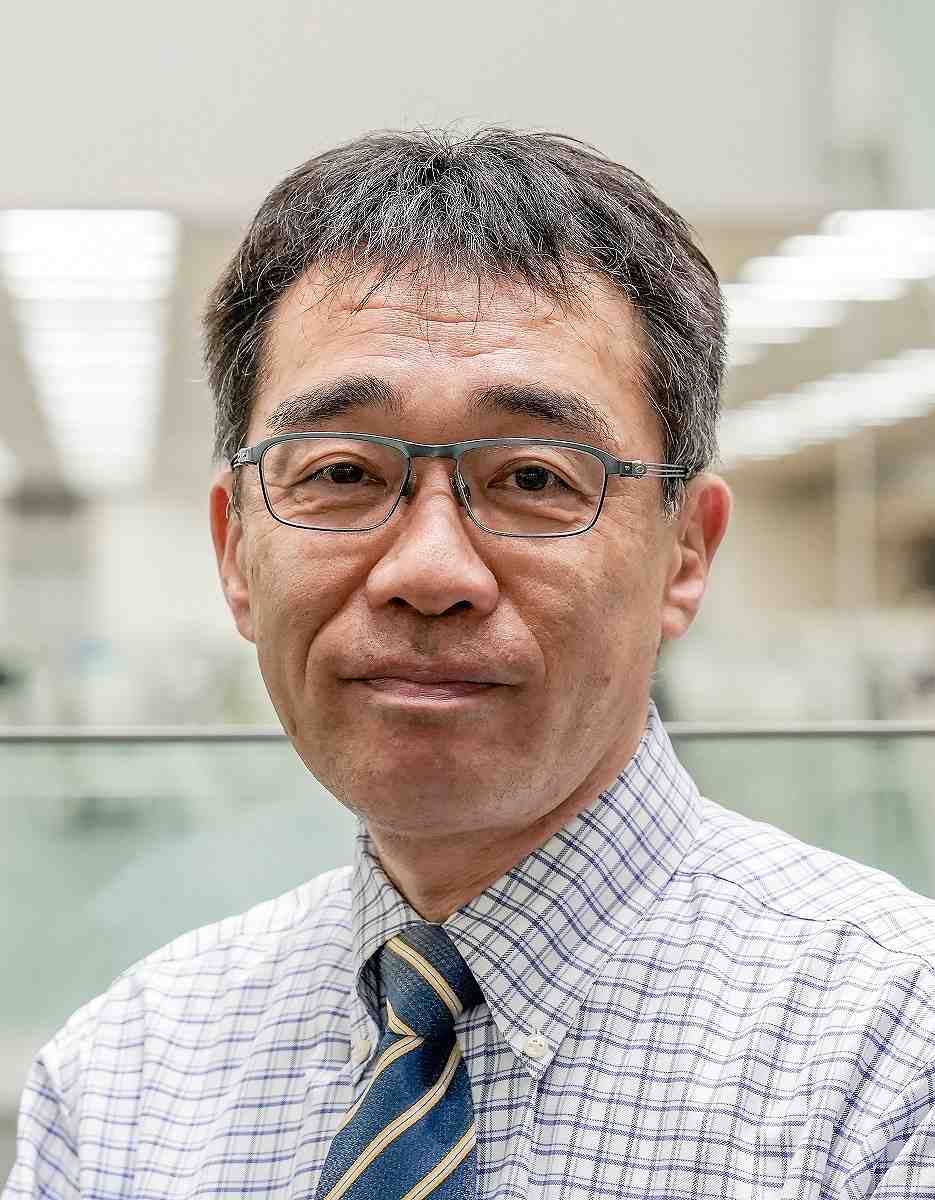

“It is becoming difficult to distinguish whether information is fact or false,” said Kazutoshi Sasahara, a professor of computational social science at the Institute of Science Tokyo.

Sasahara cited a paper published in the journal Science Advances in June 2023 by a Swiss research team.

In their research, they used GPT-3, a generative AI developed by OpenAI, to create factual and false posts for Twitter (now X). The team also collected actual tweets, placed them alongside the AI-generated posts, and asked research participants to judge whether each was factual or false. The chosen posts were about vaccine safety, climate change and other topics on which a lot of false information circulates on the internet.

The results showed that false tweets created by generative AI were more likely to be perceived as “factual” than false tweets written by people, while factual tweets made by AI tended to be perceived as more plausible than tweets written by people. “The AI’s writing is more persuasive,” Sasahara remarked. AI, which has learned from a vast amount of data, seems to persuade people more convincingly with its uncluttered sentences, whereas sentences written by humans tend to include typos and grammatical errors.

Images created by generative AI are also beginning to surpass reality. In an experiment conducted by a team of researchers in Australia and other countries, participants were shown AI-generated images of human faces and photos of real faces and asked to judge whether each image was made by AI. The AI-generated images were more likely to be judged as real than the real images were. The research team termed this phenomenon “AI hyperrealism.”

In the digital space, AI-created information appears more realistic than real data, and sensational AI-generated fake images are flooding the internet, garnering a lot of clicks.

False information on social media caused confusion in rescue operations for the Noto Peninsula Earthquake in January last year.

The quality of AI-made false information has been improving rapidly, and the quantity has exploded. Detecting it requires enormous amounts of information processing, so the only practical way to counter false information made by AI is to rely on AI as well.

Last October, nine organizations from industry and academia, including Fujitsu and the National Institute of Informatics, began research and development for a system to determine the authenticity of online information using AI. Sasahara, who is participating in the development of the system, said: “There is no magic bullet. We need to work on various aspects, such as raising the level of technology, public literacy on false information, and legal regulations.”

Pollution easily permeates the natural environment, but countermeasures take time and effort. The same is true in digital space.

Political Pulse appears every Saturday.

Makoto Mitsui

Makoto Mitsui is a Senior Research Fellow at Yomiuri Research Institute.
