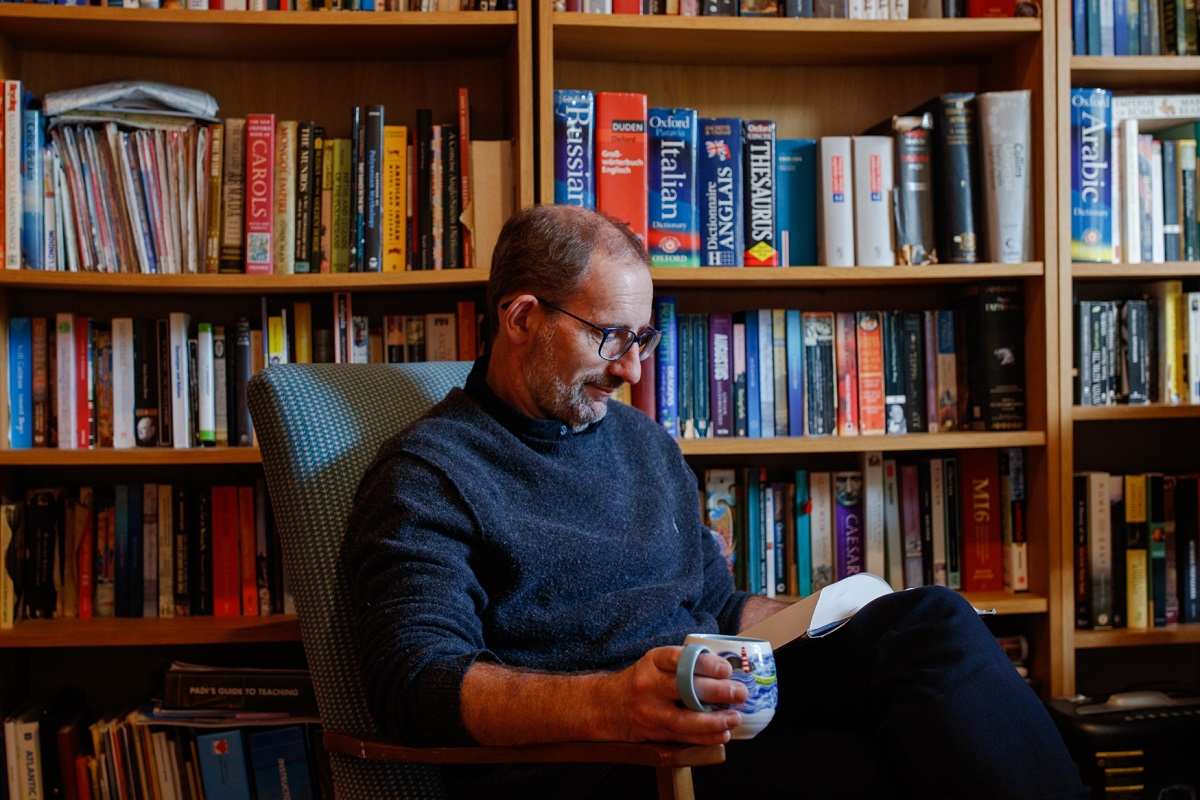

Ben Nimmo, a threat intelligence investigator at OpenAI, at his home in Scotland.

13:49 JST, October 16, 2024

As key national security and intelligence officials prepared in June for the crucible of the presidential race, a new employee of OpenAI found himself being whisked around Washington to brief them on an emerging peril from abroad.

Ben Nimmo, the principal threat investigator for the high-profile AI pioneer, had uncovered evidence that Russia, China and other countries were using its signature product, ChatGPT, to generate social media posts to sway political discourse online. Nimmo, who had started at OpenAI only in February, was taken aback when he saw that government officials had printed out his report, with key findings about the operations highlighted and tabbed.

That attention underscored Nimmo’s place at the vanguard in confronting the dramatic boost that artificial intelligence can provide to foreign adversaries’ disinformation operations. In 2016, he was one of the first researchers to identify how the Kremlin interfered in U.S. politics online. Now tech companies, government officials and other researchers are looking to him to ferret out foreign adversaries who are using OpenAI’s tools to stoke chaos in the tense weeks before Americans vote on Nov. 5.

So far, the 52-year-old Englishman says Russia and other foreign actors are largely “experimenting” with AI, often in amateurish and bumbling campaigns that have limited reach with U.S. voters. But OpenAI and the U.S. government are bracing for Russia, Iran and other hostile nations to become more effective with AI, and their best hope of parrying that threat is to expose and blunt operations before they gain traction.

Katrina Mulligan, a former Pentagon official who accompanied Nimmo to the meetings, said it’s essential to build that “muscle memory” when the bad actors are making dumb mistakes.

“You’re not going to catch the really sophisticated actors if you don’t start there,” said Mulligan, who now leads national security policy and partnerships for OpenAI.

On Wednesday, Nimmo released a report that said OpenAI had disrupted four operations that targeted elections around the world this year, including an Iranian effort that sought to exacerbate America’s partisan divide with social media comments and long-form articles about U.S. politics, the conflict in Gaza and Western policies toward Israel.

Nimmo’s report said OpenAI had uncovered 20 operations and deceptive networks worldwide this year. In one, an Iranian was using ChatGPT to refine malicious software intended to compromise Android devices. In another, a Russian firm used ChatGPT to generate fake news articles and the company’s image generator, Dall-E, to craft lurid, cartoonish pictures of warfare in Ukraine to better attract eyeballs in crowded social media feeds.

None of those posts, Nimmo emphasized, went viral. But he said he remains on high alert because the campaign isn’t over.

Researchers say Nimmo’s reports for OpenAI are essential as other companies, especially X (formerly Twitter), pull back from countering disinformation. But some of his peers in the field worry that OpenAI may be minimizing how its products can supercharge threats in a heated election cycle.

“He works for a corporation,” said Darren Linvill, a professor studying disinformation at Clemson University. “He has certain incentives to downplay the impact.”

Nimmo says he measures impact using a scale he developed before the 2020 election, when he was working for the social media research firm Graphika.

The election is high stakes for OpenAI, recently valued at $157 billion. Some policymakers are wary that the company’s rollout of ChatGPT, Dall-E and other products could hand U.S. enemies a powerful new tool to confuse American voters, perhaps in ways that exceed Russia’s campaign to sway the 2016 election. Bringing on Nimmo in February was widely seen as the company’s bid to avoid some of the missteps that social media companies, such as Meta, made in previous electoral cycles.

But Nimmo is one of only a handful of people working full-time at OpenAI on threat investigations. Other major tech companies, including Meta, where Nimmo was previously the global lead for threat intelligence, field far larger teams.

Amid scrutiny of OpenAI’s approach, Nimmo has started repeating what he calls a misquote from Harry Potter: “We can use magic, too.”

“I’m not a techie by any stretch of the imagination, but I get to use AI,” said Nimmo, speaking on Zoom from a library in his Scottish home shelved with books featuring his favorite investigator, Sherlock Holmes. “If there’s any AI revolution going on, that’s it. It’s the ability to scale for investigative analysis and capability.”

The problem, of course, is that malign actors are availing themselves of the same revolutionary capability.

From trombone toting to troll hunting

Russian propaganda hit Ben Nimmo with a headbutt to the face.

A drunk rioter in 2007 smashed the then-journalist’s nose while he was reporting on violent protests in Estonia after Russian state television and radio stations stoked long-running tensions over the Baltic country’s complicated history with the Soviet Union.

That blow to the head set the self-described “medievalist by training” up for a career as one of the world’s preeminent hunters of Russian trolls, powered in large part by his trained eye for textual anomalies and patterns. Militaries ran influence operations as early as 1275 B.C., he said; what’s new today is the technology that enables them to spread like wildfire.

After studying at Cambridge, he worked as a scuba diving instructor. When he was 25, his close friend was murdered while they were on a volunteer expedition in Belize. He honored her memory by spending nine months walking from Canterbury, England, to Santiago de Compostela, Spain, with a trombone on his back, playing jazz all along the way.

As he traveled around the world, including throughout the Baltics as a reporter for the Deutsche Presse-Agentur, Nimmo honed his foreign language skills. He has read “Lord of the Rings” in 10 languages and says he speaks seven – including, usefully, Russian.

In 2011, he became a press officer for the North Atlantic Treaty Organization, where he observed a “constant” flow of Russian disinformation. He began to study influence operations full-time after the 2014 annexation of Crimea, becoming part of a small community of researchers studying how Russian propaganda was evolving online.

In November 2016, as one of the founders of the Atlantic Council’s Digital Forensic Research Lab, Nimmo published an op-ed for CNN titled “How Russia is trying to rig the US election,” where he noted that it appeared the Kremlin was attempting “to shift the debate” on platforms “with a network of fake, automated (‘bot’) and semi-automated (‘cyborg’) accounts.”

At the time, Facebook CEO Mark Zuckerberg said it was “crazy” to suggest that falsehoods on his platform could influence the outcome of the U.S. election. It took years for journalists, researchers and government investigators to uncover the extent of the social media campaign by the Russia-based Internet Research Agency to seed tailored ads, posts and videos to help elect Donald Trump and sow public discord.

Nimmo was well positioned to translate researchers’ findings to policymakers and the public. In blog posts, he shared tips on how to spot bots on Twitter and detailed how a Russian Twitter account masquerading as an American fooled the 2016 Trump campaign.

Nimmo’s background in literature proved useful, too, as he studied networks of automated accounts. During one investigation into a network spreading pro-China political narratives online, he noticed strange strings of text, including one that mentioned Van Helsing, the vampire-fighting doctor in Bram Stoker’s classic novel. Nimmo soon realized that the bot network operator had filled the fake bios of each account with text from the e-book of “Dracula,” and, ultimately, named the network after the vampire.

In 2019, he published a report for Graphika on “Spamouflage,” fake accounts on YouTube, Twitter and Facebook that China leveraged to amplify attacks on Hong Kong protesters. The Spamouflage network has evolved over the years, and Graphika recently reported that it has become more aggressive in its efforts to sway U.S. political discourse ahead of the election.

In February 2021, Nimmo was hired by Facebook, now called Meta, to lead global threat investigations. He became the face of the company’s efforts to secure the 2022 midterms from foreign interference, exposing a network of China-based accounts that posed as liberal Americans living in Florida, Texas and California posting criticism of the Republican Party.

After three years at Meta, he was drawn in February to his position at OpenAI, which afforded him a new vantage point on how Russia and other actors were abusing AI to amplify disinformation campaigns. About three months after he started, the company released a report saying Nimmo had discovered Russia, China, Iran and Israel using its tools to spread propaganda. Nimmo named one operation “Bad Grammar” because it was prone to mistakes, such as posting common replies from ChatGPT refusing to answer a question.

Nimmo typically starts his day of threat hunting from his home in the Scottish countryside by checking reports of suspicious activity that his automated tools have drawn in overnight – and by brewing a cup of tea. He’s skeptical of the kettles in U.S. hotels, so if he’s visiting OpenAI’s San Francisco headquarters or meeting with officials in Washington, he packs a travel kettle in his bag.

The work has also come with personal challenges. In 2017, a Russian bot network impersonating one of Nimmo’s co-workers claimed he died. In 2018, he received a flood of death threats, including one that warned Nimmo he would be hanged next to the queen. He has had to increase his cybersecurity defenses and jokes that there is a growing list of countries where he won’t go on holiday anytime soon.

When the toll of the online discourse becomes too much, he goes for walks in the fields surrounding his home.

“This is a job where you do it because it really matters,” he said, and then pointed to his slightly askew nose.

“Ever since 2007, I’ve known it’s mattered. My face reminds me that it matters.”
