Voters Using AI to Choose Candidates in Japan’s Upcoming General Election; ChatGPT, Other AI Services Found Providing Incorrect Information

Logos of major generative AI companies are seen on a smartphone screen.

2:00 JST, February 3, 2026

An increasing number of people are using generative artificial intelligence to help choose which candidates or parties to vote for in the upcoming House of Representatives election.

Although the technology has enabled voters to obtain information conveniently and efficiently, caution is needed, as AI-generated answers may be incorrect.

“People need to confirm whether the source of their information is trustworthy when making a decision about what to do with their very important ballot,” said one expert on the issue.

One 42-year-old male company employee, who was transferred from his hometown in Shizuoka Prefecture to Tokyo, asks ChatGPT, a conversational generative AI, about the situation in his home constituency and each candidate’s chances of winning.

He regards ChatGPT as a “buddy” who gives him advice on things like workplace relationships.

After the media reported in January on the possibility of a lower house dissolution, the man asked ChatGPT such questions as “What will be the negative effects on things like education expenses?”

Sometimes he felt that the AI might have only been telling him what he wanted to hear, so he added directions such as “Give me advice from the viewpoint of a neutral third party.”

On Jan. 27, when the campaigning period for the general election kicked off, he attended street speeches by party heads in Akihabara.

“I’ll decide which candidate or party to vote for by hearing what they actually pledge and getting an idea of their personalities,” he said.

Some eligible voters are also using Grok, another conversational generative AI, which is part of the X social media platform.

When a user posts a question on X with “@Grok” in the body, the AI replies with an answer.

Using an analytical tool, The Yomiuri Shimbun counted 4,700 such posts containing “House of Representatives election,” “general election” and other Japanese keywords in January alone.

A notable number of questions concerned candidates’ political achievements and stances. There were also many requests for fact-checking and for predictions of how many seats each party will win in the lower house.

Some answers from Grok, however, did not accurately reflect the facts.

To a question about an opposition party candidate’s past achievements, Grok answered, “No specific achievements have been confirmed since the candidate’s election in the previous lower house poll.”

However, The Yomiuri Shimbun looked into what the candidate has done during that period and found that they had submitted a bill and a list of questions to the Cabinet.

There have also been cases in which generative AI gave patently false answers.

On Jan. 30, a Yomiuri Shimbun reporter asked Gemini, another conversational generative AI, a question about a Tokyo constituency where five candidates will run.

The reporter asked, “Which candidate is enthusiastic about providing assistance for raising children?” In response, the AI presented four names, but two were not real candidates. The reporter repeated the question, but the AI gave another wrong answer.

Generative AI sometimes provides incorrect information because it makes contextual mistakes when summarizing correct information or fills in gaps with fictitious information.

“People need to be aware that just because an answer was provided by AI doesn’t mean that it is necessarily accurate or unbiased,” said Prof. Kazuhiro Taira, an expert in media studies at J.F. Oberlin University. “People should fact-check the information they receive using media reports and the official website of each party or candidate.”

Top Articles in Politics

-

Japan Seeks to Enhance Defense Capabilities in Pacific as 3 National Security Documents to Be Revised

-

Japan Tourism Agency Calls for Strengthening Measures Against Overtourism

-

Japan’s Prime Minister: 2-Year Tax Cut on Food Possible Without Issuing Bonds

-

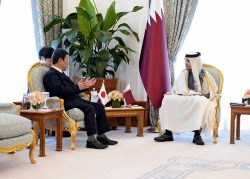

Japan-South Korea Leaders Meeting Focuses on Rare Earth Supply Chains, Cooperation Toward Regional Stability

-

Japanese Government Plans New License System Specific to VTOL Drones; Hopes to Encourage Proliferation through Relaxed Operating Requirements

JN ACCESS RANKING

-

Univ. in Japan, Tokyo-Based Startup to Develop Satellite for Disaster Prevention Measures, Bears

-

JAL, ANA Cancel Flights During 3-day Holiday Weekend due to Blizzard

-

China Confirmed to Be Operating Drilling Vessel Near Japan-China Median Line

-

China Eyes Rare Earth Foothold in Malaysia to Maintain Dominance, Counter Japan, U.S.

-

Japan Institute to Use Domestic Commercial Optical Lattice Clock to Set Japan Standard Time