Govts Across the Globe Can Work Together to Keep AI Safe; Sunak to Host Major AI Summit at Bletchley Park

U.K. Prime Minister Rishi Sunak

7:00 JST, November 1, 2023

Britain will host the first ever major global summit on artificial intelligence at Bletchley Park in central England on Nov. 1 and 2. The summit will discuss international coordination and the role that governments across the world, not just tech firms, should play in properly assessing the grave risks posed by AI without regulating the cutting-edge technology too hastily. World leaders including U.S. Vice President Kamala Harris and European Commission President Ursula von der Leyen are expected to attend the event. U.K. Prime Minister Rishi Sunak wrote the following contribution to The Japan News.

I believe nothing in our foreseeable future will transform our lives more than artificial intelligence. Like the coming of electricity or the birth of the internet, it will bring new knowledge, new opportunities for economic growth, new advances in human capability, and the chance to solve global problems we once thought beyond us.

AI can help solve world hunger by preventing crop failures and making it cheaper and easier to grow food. It can help accelerate the transition to net zero. And it is already making extraordinary breakthroughs in health and medicine, aiding us in the search for new dementia treatments and vaccines for cancer.

But like previous waves of technology, AI also brings new dangers and new fears. So, if we want our children and grandchildren to benefit from all the opportunities of AI, we must act — and act now — to give people peace of mind about the risks.

What are those risks? For the first time, the British government has taken the highly unusual step of publishing our analysis, including an assessment by the U.K. intelligence community. As prime minister, I felt this was an important contribution the U.K. could make to help the world have a more informed and open conversation.

Our reports provide a stark warning. AI could be used for harm by criminals or terrorist groups. The risks of cyber-attacks, disinformation, and fraud pose a real threat to society. And in the most unlikely but extreme cases, some experts think there is even the risk that humanity could lose control of AI completely, through the kind of AI sometimes referred to as “super intelligence.” We should not be alarmist about this. There is a very real debate happening, and some experts think it will never happen.

But even if the very worst risks are unlikely to happen, they would be incredibly serious if they did. So, leaders around the world, no matter our differences on other issues, have a responsibility to recognize those risks, come together, and act. Not least because many of the loudest warnings about AI have come from the people building this technology themselves. And because the pace of change in AI is simply breathtaking: every new wave is more advanced and better trained, built with better chips and more computing power.

So, what should we do? First, governments do have a role. The U.K. has just announced the first ever AI Safety Institute. Our institute will bring together some of the most respected and knowledgeable people in the world. They will carefully examine, evaluate, and test new types of AI so that we understand what they can do. And we will share those conclusions with other countries and companies to help keep AI safe for everyone.

But AI does not respect borders. No country can make AI safe on its own. So our second step must be to increase international cooperation. That starts this week at the first ever Global AI Safety Summit, which I’m proud the U.K. is hosting.

What do we want to achieve at this week’s summit? I want us to agree the first ever international statement about the risks from AI. Because right now, we don’t have a shared understanding of the risks we face. And without that, we cannot work together to address them.

I’m also proposing that we establish a truly global expert panel, nominated by those attending the summit, to publish a State of AI Science report. And over the longer term, my vision is for a truly international approach to safety, where we collaborate with partners to ensure AI systems are safe before they are released.

None of that will be easy to achieve. But leaders have a responsibility to do the right thing. To be honest about the risks. And to take the right long-term decisions to earn people’s trust, giving peace of mind that we will keep you safe. If we can do that, if we can get this right, then the opportunities of AI are extraordinary. And we can look to the future with optimism and hope.

Top Articles in Editorial & Columns

-

Riku-Ryu Pair Wins Gold Medal: Their Strong Bond Leads to Major Comeback Victory

-

40 Million Foreign Visitors to Japan: Urgent Measures Should Be Implemented to Tackle Overtourism

-

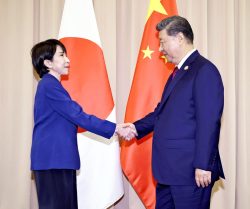

China Provoked Takaichi into Risky Move of Dissolving House of Representatives, But It’s a Gamble She Just Might Win

-

University of Tokyo Professor Arrested: Serious Lack of Ethical Sense, Failure of Institutional Governance

-

Policy Measures on Foreign Nationals: How Should Stricter Regulations and Coexistence Be Balanced?

JN ACCESS RANKING

-

Japan PM Takaichi’s Cabinet Resigns en Masse

-

Japan Institute to Use Domestic Commercial Optical Lattice Clock to Set Japan Standard Time

-

Israeli Ambassador to Japan Speaks about Japan’s Role in the Reconstruction of Gaza

-

Man Infected with Measles Reportedly Dined at Restaurant in Tokyo Station

-

Videos Plagiarized, Reposted with False Subtitles Claiming ‘Ryukyu Belongs to China’; Anti-China False Information Also Posted in Japan